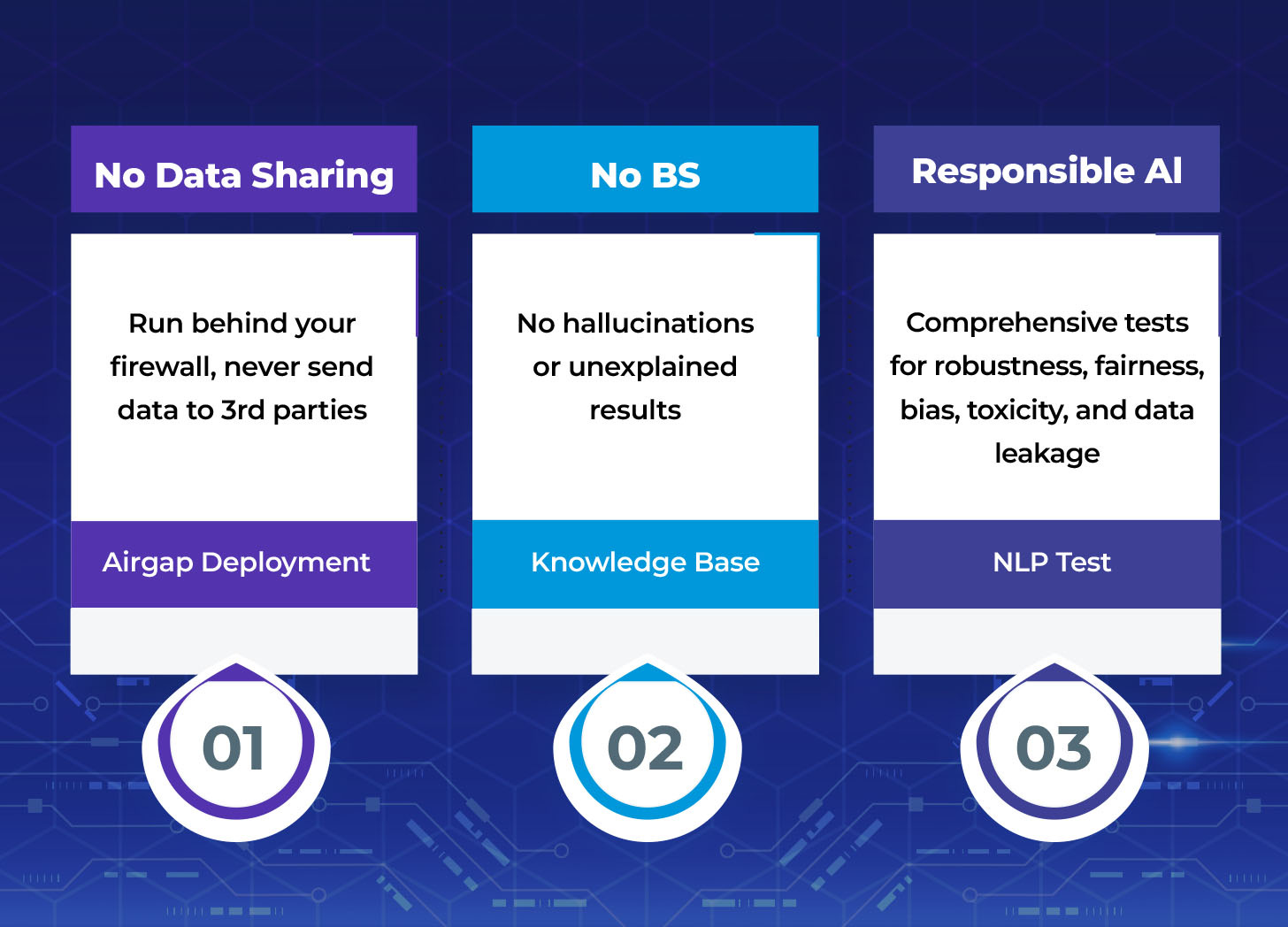

3 Criteria for Regulatory-Grade Large Language Models

Regulatory Grade AI requires transparency, rigorous testing, and privacy protection for AI models used in regulated industries, ensuring compliance, accuracy, and safety in decision-making.

Large language models (LLMs) have the potential to revolutionize decision-making and creative processes in many industries. In regulated sectors such as healthcare and life sciences, however, certain issues, gaps, and limitations call for a higher standard of AI, known as Regulatory Grade AI. This article defines three criteria that make an AI model "regulatory grade", that is, suitable for use in highly regulated fields while ensuring the highest level of compliance, accuracy, and safety.

The No BS Principle

The first criterion is the "No Bullshit" principle: LLMs should be designed so that they do not hallucinate or return false information, and they should cite the source of every answer they provide.

This feature allows human experts to review the cited source and assess its reliability. For instance, a doctor may receive an answer regarding a clinical guideline from the AI model. If the model cites a study that involved fewer than 100 patients, the doctor can decide not to trust that specific paper, as it may not be sufficiently robust or representative. By providing a transparent trail of evidence, the "No BS" principle ensures that AI-generated information is held to the same standard as any other expert opinion.
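To make the review step concrete, here is a minimal Python sketch, assuming each answer carries a list of citations with study metadata. The `Citation` and `Answer` types, the `patients` field, and `review_answer` are hypothetical names; the 100-patient threshold comes from the example above. The code does not decide what is true. It rejects uncited answers outright and flags weak sources so the expert can make the call.

```python
from dataclasses import dataclass, field

NO_CITATION = "No citation provided: reject the answer."


@dataclass
class Citation:
    """Hypothetical provenance record attached to a model answer."""
    title: str
    url: str
    patients: int  # number of patients in the cited study


@dataclass
class Answer:
    text: str
    citations: list[Citation] = field(default_factory=list)


def review_answer(answer: Answer, min_patients: int = 100) -> list[str]:
    """Return human-readable concerns for an expert reviewer (empty list = none found)."""
    if not answer.citations:
        # "No BS": an uncited answer cannot be checked, so it is not usable.
        return [NO_CITATION]
    # Flag, rather than silently drop, citations from small studies.
    return [
        f"{c.title} ({c.url}): {c.patients} patients, "
        f"below the {min_patients}-patient threshold"
        for c in answer.citations
        if c.patients < min_patients
    ]


if __name__ == "__main__":
    answer = Answer(
        text="Hypothetical guideline answer.",
        citations=[
            Citation("Large hypothetical trial", "https://example.org/trial", 1200),
            Citation("Small hypothetical pilot", "https://example.org/pilot", 42),
        ],
    )
    for concern in review_answer(answer):
        print(concern)
```

Returning concerns instead of a single yes/no keeps the final judgment with the human reviewer, which is the point of the principle.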

Responsible AI

The second criterion for regulatory-grade AI is Applied Responsible AI: AI models should undergo rigorous testing for robustness, accuracy, fairness, and representation, as well as for bias mitigation, toxicity reduction, and the prevention of data leakage.

These tests should be executable and presented in a human-readable format that can easily be shared with regulators. By demonstrating a commitment to responsible AI practices, organizations can reassure regulators, customers, and other stakeholders that their AI models not only comply with regulations but also adhere to the highest ethical and technical standards.
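As an illustration of what "executable and human-readable" could look like, here is a minimal Python sketch. The model is assumed to be any function from prompt to answer, and all names are hypothetical. The two checks (a whitespace-perturbation robustness check and a data-leakage check against a made-up `MRN-` record-number format) are toy stand-ins for the much broader test categories listed above. A production suite would use many cases per category and an established test framework.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Hypothetical interface: any function that maps a prompt to an answer.
Model = Callable[[str], str]

# Hypothetical medical-record-number format, used only for this example.
MRN_PATTERN = re.compile(r"\bMRN-\d{6}\b")


@dataclass
class CheckResult:
    name: str
    category: str
    passed: bool
    detail: str


def check_whitespace_robustness(model: Model, prompt: str) -> CheckResult:
    """Robustness: padding the prompt with whitespace should not change the answer."""
    same = model(prompt) == model(f"  {prompt}  ")
    return CheckResult("whitespace perturbation", "robustness", same, f"prompt={prompt!r}")


def check_no_record_numbers(model: Model, prompt: str) -> CheckResult:
    """Data leakage: the answer must not contain anything shaped like a record number."""
    leaked = MRN_PATTERN.findall(model(prompt))
    detail = f"leaked {leaked}" if leaked else "no matches"
    return CheckResult("record-number leakage", "data leakage", not leaked, detail)


def render_report(results: list[CheckResult]) -> str:
    """Plain-text summary that a reviewer or regulator can read without running code."""
    passed = sum(r.passed for r in results)
    lines = [f"{passed}/{len(results)} checks passed"]
    for r in results:
        status = "PASS" if r.passed else "FAIL"
        lines.append(f"[{status}] {r.category}: {r.name} ({r.detail})")
    return "\n".join(lines)


if __name__ == "__main__":
    # Stub standing in for a real model: it strips whitespace but echoes the prompt,
    # so it passes the robustness check and fails the leakage check.
    def stub_model(prompt: str) -> str:
        return "Answer to: " + prompt.strip()

    results = [
        check_whitespace_robustness(stub_model, "What is the first-line therapy for X?"),
        check_no_record_numbers(stub_model, "Summarize the chart for MRN-123456."),
    ]
    print(render_report(results))
```

Run as a script, the stub produces a report whose first line is `1/2 checks passed`, followed by one `[PASS]` or `[FAIL]` line per check. The same report text is what would be shared with a regulator.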

Privacy: No Sharing

The third criterion for regulatory-grade AI is the ability to run privately within an organization's firewall. This ensures that no proprietary or sensitive data is shared or transmitted outside the organization, maintaining security and confidentiality. Systems should be designed from the ground up to work seamlessly in high-compliance, air-gapped environments, protecting organizations from data breaches and other cyber threats.

By keeping data and processing in-house, organizations can maintain control over their information, which is essential for compliance with stringent regulations in fields such as healthcare and life sciences. Note that this criterion does not preclude running in a cloud environment, as long as you control the infrastructure and the encryption keys and no third party can access your data.
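One way to make "no data leaves the perimeter" enforceable in application code is an egress guard that refuses to send prompts anywhere but an internal address. The sketch below assumes the model is served at a private IP address, either on premises or inside a cloud network you control. The function names are hypothetical, and such a check complements, rather than replaces, network-level controls such as firewalls and air-gapping.

```python
import ipaddress
from urllib.parse import urlparse


def is_private_endpoint(endpoint_url: str) -> bool:
    """Return True only if the URL's host is a literal private or loopback IP address.

    Hostnames are rejected rather than resolved: this conservative rule means a
    DNS change cannot silently redirect traffic outside the organization.
    """
    host = urlparse(endpoint_url).hostname
    if host is None:
        return False
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        return False  # not an IP literal, e.g. a public SaaS hostname
    return addr.is_private or addr.is_loopback


def require_private_endpoint(endpoint_url: str) -> str:
    """Raise before any data is sent if the endpoint is outside the perimeter."""
    if not is_private_endpoint(endpoint_url):
        raise PermissionError(f"refusing to send data to {endpoint_url}")
    return endpoint_url


if __name__ == "__main__":
    for url in ["http://10.0.0.5:8080/v1", "http://[::1]:8000", "https://api.example.com/v1"]:
        print(url, is_private_endpoint(url))
```

Rejecting hostnames outright is deliberately strict; a real deployment might instead allow an explicit list of internal hostnames managed by the organization.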

Establishing high standards for AI models is crucial in today's rapidly evolving technological landscape. By developing and adopting regulatory-grade AI, organizations can ensure that their AI-driven decision-making processes are safe and effective. This will ultimately lead to better outcomes for patients, more efficient research, and increased trust in AI-powered solutions across regulated industries.