Why AI Models Crash And Burn In Production

This article discusses the common mistake of assuming that machine learning (ML) models will continue to perform accurately over time.

One magical aspect of software is that it just keeps working. If you code a calculator app, it will still correctly add and multiply numbers a month, a year, or 10 years later. The fact that the marginal cost of software approaches zero has been a bedrock of the software industry’s business model since the 1980s.

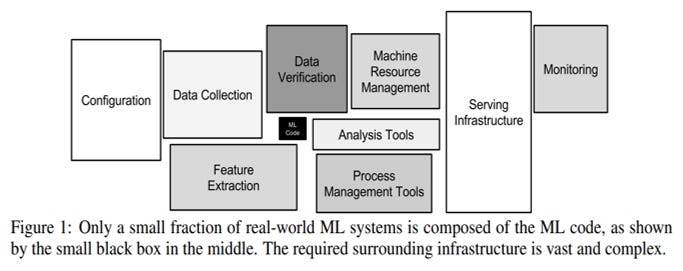

This is no longer the case when you are deploying machine learning (ML) models. Assuming that a deployed model will simply keep working is the most common mistake companies make when taking their first artificial intelligence (AI) products to market. The moment you put a model in production, it starts degrading.

Why Do Models Fail?

Your model’s accuracy will be at its best until you start using it. It then deteriorates as the world it was trained to predict changes. This phenomenon is called concept drift, and while it’s been heavily studied in academia for the past two decades, it’s still often ignored in industry best practices.

An intuitive example of where this happens is cybersecurity. Malware evolves quickly, and it’s hard to build ML models that reflect future, unseen behavior. Researchers from the University of London and the University of Louisiana have proposed frameworks that retrain malware detection models continuously in production. The Sophos Group has shown how well-performing models for detecting malicious URLs degrade sharply — at unexpected times — within a few weeks.

The key is that, in contrast to a calculator, your ML system does interact with the real world. If you’re using ML to predict demand and pricing for your grocery store, you’d better consider this week’s weather, the upcoming national holiday and what your competitor across the street is doing. If you’re designing clothes or recommending music, you’d better follow opinion-makers, celebrities and current events. If you’re using AI for auto-trading, bidding for online ads or video gaming, you must constantly adapt to what everyone else is doing.

Drift in Healthcare: Faster Than You’d Expect

Knowing all this, I still went into a major project a few years ago confident that it wouldn't happen to me. I was applying ML in health care. Specifically, I was predicting 30-day hospital readmissions — one of the most well-studied predictive analytics problems in U.S. health care, thanks to Medicare's Hospital Readmissions Reduction Program.

My confidence seemed justified. Clinical guidelines are so stable that a systematic review of best practices for updating guidelines found the most common recommendation is to review guidelines every two to three years. For readmissions, hospitals routinely use scores that are older than that, such as LACE and HOSPITAL.

What did this mean for my major project? A predictive readmission model that was trained, optimized and deployed at a hospital would start sharply degrading within two to three months. Models would change in different ways at different hospitals — or even buildings within the same hospital. In simple terms, this meant that:

1. Within three months of deploying new ML software that passed traditional acceptance tests, our customers were unhappy because the system was predicting poorly.

2. The more hospitals we deployed to, the bigger the hole we found ourselves in.

Why did this happen? ML models interact with the real world. Changing certain fields in electronic health records made documentation easier but made other fields blank. Switching some lab tests to a different lab meant that different codes were used. Starting to take one more type of insurance changed the kind of people who went to the ER. Each of these changes either breaks the features the model depends on or changes the prior distributions the model was trained on, resulting in degraded accuracy in prediction. None of these changes was a software update or interface change. Hence, no one thought it necessary to let us know — or even think about it as a breaking change.
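The two failure modes described above (fields that go blank and codes the model never saw in training) can be checked for mechanically. What follows is a minimal sketch, not the system I actually built: the function name `feature_health`, the field name `lab_code` and the sample records are all hypothetical, and it assumes records arrive as plain dictionaries.

```python
def feature_health(train_rows, live_rows, field):
    """Compare one field between training and live records.

    Returns the live missing rate, the change in missing rate versus
    training, and any live values never seen in training.
    """
    def missing_rate(rows):
        if not rows:
            return 0.0
        missing = sum(1 for r in rows if r.get(field) in (None, ""))
        return missing / len(rows)

    def values(rows):
        return {r[field] for r in rows if r.get(field) not in (None, "")}

    train_missing = missing_rate(train_rows)
    live_missing = missing_rate(live_rows)
    return {
        "live_missing_rate": live_missing,
        "missing_rate_change": live_missing - train_missing,
        "unseen_values": sorted(values(live_rows) - values(train_rows)),
    }


# Hypothetical records: lab work moved to a lab that uses different codes,
# and one live record has the field left blank.
train = [{"lab_code": "A1"}, {"lab_code": "A2"}, {"lab_code": "A1"}, {"lab_code": "A2"}]
live = [{"lab_code": "B7"}, {"lab_code": ""}, {"lab_code": "A1"}, {"lab_code": "B7"}]

report = feature_health(train, live, "lab_code")
print(report)
```

Running a check like this per field on a schedule would surface the kind of silent upstream change that nobody thinks to report as a breaking change.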

Prepare For Change

Want to avoid this debacle? Here's what you can do instead:

• Online measurement of accuracy: Just as you need to know the latency of your website and public application programming interfaces, you need to know how accurate your models are in production. How many predictions actually came true? This requires collecting and logging real-use results but is an elementary requirement.

• Mind the gap: That is, watch out for gaps between the distributions of your training and online data sets. This is a simple-to-measure, effective-in-practice heuristic that uncovers a variety of issues. If your training data has 50% high-risk patients, but in production, you're predicting only 30% as high-risk, it's probably time to retrain.

• Online data quality alerts: If the volume or makeup of the input data changes in an unexpected way, an alert should go to your operations team. Are your patients suddenly older, more female or less diabetic? If you haven't trained your model on those types of patients, you may be serving bad predictions.
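As a rough illustration of the first two practices, here is a minimal sketch of a monitor that tracks rolling accuracy and the gap between the training and production high-risk rates. The class name `ModelMonitor`, the window size and both thresholds are assumptions chosen for the example, not recommended values, and a real system would persist these logs rather than hold them in memory. The same pattern could be applied to input features for the data quality alerts.

```python
from collections import deque


class ModelMonitor:
    """Tracks rolling accuracy and the predicted high-risk rate in production."""

    def __init__(self, train_positive_rate, window=1000, max_gap=0.10, min_accuracy=0.70):
        self.train_positive_rate = train_positive_rate  # e.g. 0.50 in training data
        self.max_gap = max_gap                # assumed tolerance, tune per project
        self.min_accuracy = min_accuracy      # assumed floor, tune per project
        self.predictions = deque(maxlen=window)  # recent predicted labels
        self.outcomes = deque(maxlen=window)     # (predicted, actual) once known

    def log_prediction(self, predicted):
        self.predictions.append(bool(predicted))

    def log_outcome(self, predicted, actual):
        self.outcomes.append((bool(predicted), bool(actual)))

    def accuracy(self):
        """Share of logged predictions that actually came true."""
        if not self.outcomes:
            return None
        return sum(p == a for p, a in self.outcomes) / len(self.outcomes)

    def positive_rate_gap(self):
        """Absolute gap between live and training high-risk rates."""
        if not self.predictions:
            return None
        live_rate = sum(self.predictions) / len(self.predictions)
        return abs(live_rate - self.train_positive_rate)

    def alerts(self):
        found = []
        acc = self.accuracy()
        if acc is not None and acc < self.min_accuracy:
            found.append("accuracy_below_threshold")
        gap = self.positive_rate_gap()
        if gap is not None and gap > self.max_gap:
            found.append("prediction_rate_gap")
        return found


# Training data had 50% high-risk patients; production predicts only 30%.
monitor = ModelMonitor(train_positive_rate=0.50)
for i in range(10):
    monitor.log_prediction(i < 3)  # 3 of 10 predicted high-risk
for i in range(10):
    # Outcomes arrive later: 7 of 10 patients were actually readmitted.
    monitor.log_outcome(predicted=(i < 3), actual=(i < 7))

print(monitor.accuracy(), monitor.positive_rate_gap(), monitor.alerts())
```

Keeping predictions and outcomes in separate windows reflects the fact that ground truth, such as a 30-day readmission, arrives long after the prediction is served.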

Most importantly, keep your best data scientists and engineers on the project after it's in production. In contrast to classic software projects where, after deployment, your operations team handles it and engineers move on to build the next big thing, a lot of the technical challenge in ML and AI systems is keeping them accurate. Automating retrain pipelines, online measurement, and A/B testing is hard to get right. Your strongest technologists will enjoy this combination of intellectual challenge and business impact.

Don't Repeat My Mistakes

You will need to invest in order to maintain the accuracy of the machine learning products and services that your customers use. This means that there’s a higher marginal cost to operating ML products compared to traditional software. Start with the best practices above to successfully scale and improve. Unlike me and many others, you have the opportunity to avoid repeating this common mistake.